I have been struggling with this problem for a while.

systemd-shutdown not all md devices stopped 1 left

The system also keeps trying to unmount a partition…

I started googling this but found only a few references, such as this GitHub issue about systemd-shutdown:

opened 10:14AM - 14 Sep 20 UTC

closed 09:29PM - 14 Sep 20 UTC

**systemd version the issue has been seen with**

> 246.4-1-arch

**Used distribution**

> Arch Linux

**Expected behaviour you didn't see**

> Dracut shutdown module from initramfs should be executed

**Unexpected behaviour you saw**

> Pivoting to initramfs and executing the "shutdown" binary is not happening. Manual inspection of /run/initramfs shows a clean structure with an executable shutdown binary (shell script).

**Steps to reproduce the problem**

1. Create an initramfs with dracut with the shutdown module

2. Set up a dracut debug shell according to man dracut-shutdown.service

# as root:

mkdir -p /run/initramfs/etc/cmdline.d

echo "rd.break=pre-shutdown rd.shell" > /run/initramfs/etc/cmdline.d/debug.conf

touch /run/initramfs/.need_shutdown

3. systemctl reboot and wait for debug shell, which never appears.

The tmpfs on /run is mounted as such:

tmpfs on /run type tmpfs (rw,nosuid,nodev,size=1629500k,nr_inodes=819200,mode=755)

The shutdown binary is in the right spot and ready for execution:

ls -alh /run/initramfs/shutdown

-rwxr-xr-x 1 root root 3.4K Sep 14 11:45 /run/initramfs/shutdown

The systemd log of the shutdown process (captured via debug.sh in /usr/lib/systemd/system-shutdown/debug.sh) contains:

```

[ 93.416413] audit: type=1334 audit(1600072901.925:125): prog-id=21 op=UNLOAD

[ 209.640808] audit: type=1131 audit(1600073017.801:126): pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=accounts-daemon comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=success'

[ 209.642848] audit: type=1131 audit(1600073017.801:127): pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=polkit comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=success'

[ 209.644317] audit: type=1131 audit(1600073017.804:128): pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=colord comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=success'

[ 209.645760] audit: type=1131 audit(1600073017.804:129): pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=alsa-restore comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=success'

[ 209.648176] audit: type=1131 audit(1600073017.808:130): pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=kexec-load@linux comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=failed'

[ 209.714209] audit: type=1131 audit(1600073017.874:131): pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=lvm2-pvscan@8:33 comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=success'

[ 209.716850] audit: type=1131 audit(1600073017.874:132): pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=lvm2-pvscan@8:34 comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=success'

[ 209.719419] audit: type=1131 audit(1600073017.878:133): pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=lvm2-pvscan@8:35 comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=success'

[ 209.721116] audit: type=1131 audit(1600073017.881:134): pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=lvm2-pvscan@8:36 comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=success'

[ 209.778645] XFS (dm-5): Unmounting Filesystem

[ 209.876761] audit: type=1131 audit(1600073018.034:135): pid=1 uid=0 auid=4294967295 ses=4294967295 msg='unit=org.cups.cupsd comm="systemd" exe="/usr/lib/systemd/systemd" hostname=? addr=? terminal=? res=success'

[ 210.262189] EXT4-fs (sda3): mounting ext2 file system using the ext4 subsystem

[ 210.296726] EXT4-fs (sda3): mounted filesystem without journal. Opts: (null)

[ 212.207102] XFS (dm-9): Unmounting Filesystem

[ 212.458003] watchdog: watchdog0: watchdog did not stop!

[ 212.853134] systemd-shutdown[1]: Syncing filesystems and block devices.

[ 213.729463] systemd-shutdown[1]: Sending SIGTERM to remaining processes...

[ 213.908456] systemd-shutdown[1]: Sending SIGKILL to remaining processes...

[ 213.914647] systemd-shutdown[1]: Hardware watchdog 'SP5100 TCO timer', version 0

[ 213.916036] systemd-shutdown[1]: Unmounting file systems.

[ 213.917859] [1796]: Remounting '/' read-only in with options 'attr2,inode64,logbufs=8,logbsize=32k,noquota'.

[ 214.480674] systemd-shutdown[1]: All filesystems unmounted.

[ 214.480681] systemd-shutdown[1]: Deactivating swaps.

[ 214.480778] systemd-shutdown[1]: All swaps deactivated.

[ 214.480783] systemd-shutdown[1]: Detaching loop devices.

[ 214.481008] systemd-shutdown[1]: All loop devices detached.

[ 214.481013] systemd-shutdown[1]: Detaching DM devices.

[ 214.484329] systemd-shutdown[1]: Detaching DM /dev/dm-9 (254:9).

[ 214.530873] systemd-shutdown[1]: Detaching DM /dev/dm-8 (254:8).

[ 214.553889] systemd-shutdown[1]: Detaching DM /dev/dm-7 (254:7).

[ 214.583873] systemd-shutdown[1]: Detaching DM /dev/dm-6 (254:6).

[ 214.623866] systemd-shutdown[1]: Detaching DM /dev/dm-5 (254:5).

[ 214.650544] systemd-shutdown[1]: Detaching DM /dev/dm-3 (254:3).

[ 214.650598] systemd-shutdown[1]: Could not detach DM /dev/dm-3: Device or resource busy

[ 214.650606] systemd-shutdown[1]: Detaching DM /dev/dm-2 (254:2).

[ 214.650624] systemd-shutdown[1]: Could not detach DM /dev/dm-2: Device or resource busy

[ 214.650630] systemd-shutdown[1]: Detaching DM /dev/dm-1 (254:1).

[ 214.650646] systemd-shutdown[1]: Could not detach DM /dev/dm-1: Device or resource busy

[ 214.650653] systemd-shutdown[1]: Detaching DM /dev/dm-0 (254:0).

[ 214.677183] systemd-shutdown[1]: Not all DM devices detached, 4 left.

[ 214.677506] systemd-shutdown[1]: Detaching DM devices.

[ 214.678993] systemd-shutdown[1]: Detaching DM /dev/dm-3 (254:3).

[ 214.679019] systemd-shutdown[1]: Could not detach DM /dev/dm-3: Device or resource busy

[ 214.679026] systemd-shutdown[1]: Detaching DM /dev/dm-2 (254:2).

[ 214.679044] systemd-shutdown[1]: Could not detach DM /dev/dm-2: Device or resource busy

[ 214.679049] systemd-shutdown[1]: Detaching DM /dev/dm-1 (254:1).

[ 214.679066] systemd-shutdown[1]: Could not detach DM /dev/dm-1: Device or resource busy

[ 214.679072] systemd-shutdown[1]: Not all DM devices detached, 4 left.

[ 214.679078] systemd-shutdown[1]: Cannot finalize remaining DM devices, continuing.

```
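To pull the stuck devices out of a long log like the one above, a small filter can help. This is only a sketch: `busy_dm_devices` is a name made up for this example, and it assumes the log has been saved as plain text in the same message format shown in the issue.

```sh
#!/bin/sh
# Hypothetical helper: reads a saved shutdown log on stdin and prints each
# DM device that systemd-shutdown reported as busy, once per device.
busy_dm_devices() {
    sed -n 's|.*Could not detach DM \(/dev/dm-[0-9][0-9]*\): .*|\1|p' | sort -u
}

# Demo using three lines copied from the log in the issue.
busy_dm_devices <<'EOF'
[ 214.650598] systemd-shutdown[1]: Could not detach DM /dev/dm-3: Device or resource busy
[ 214.650624] systemd-shutdown[1]: Could not detach DM /dev/dm-2: Device or resource busy
[ 214.679019] systemd-shutdown[1]: Could not detach DM /dev/dm-3: Device or resource busy
EOF
```

On a running system, `dmsetup ls --tree` or `lsblk` should show what sits on top of the listed devices. Note that the log only remounts '/' read-only rather than unmounting it, so devices backing the root filesystem would be expected to stay busy at this stage.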

I reported this to systemd, because from my point of view all the requirements from systemd's documentation are fulfilled, e.g. the initramfs is correctly unpacked and contains the shutdown "binary" to get further work done. If you feel that I should report this to the package maintainers first, please say so and I will file reports there as well.

Could it be that the can_initrd variable is false for some reason?

https://github.com/systemd/systemd/blob/v246/src/shutdown/shutdown.c#L510

I'd be happy to provide further information.

Thank you!
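On the `can_initrd` question raised in the quoted issue: as far as I can tell from the linked v246 source, the flag depends on not running in a container, not already running inside an initrd, and `/run/initramfs/shutdown` being executable. The sketch below approximates only the last two conditions in shell. The function name and the optional root-prefix argument are assumptions made for this example, and testing for `/etc/initrd-release` is a simplification of what systemd actually does.

```sh
#!/bin/sh
# Hypothetical helper, not part of systemd: roughly mirrors two of the
# conditions behind can_initrd. The optional argument is a root prefix so
# the check can be tried against a test tree instead of the live system.
can_pivot_to_initramfs() {
    root="${1:-}"
    # Assumption: the presence of /etc/initrd-release marks an initrd environment.
    if [ -e "$root/etc/initrd-release" ]; then
        echo "looks like an initrd environment, no pivot expected"
        return 1
    fi
    # [ -x ] also fails when the file sits on a noexec mount.
    if [ ! -x "$root/run/initramfs/shutdown" ]; then
        echo "$root/run/initramfs/shutdown is missing or not executable"
        return 1
    fi
    echo "$root/run/initramfs/shutdown is present and executable"
}

# Run against the live system just before rebooting; never abort the caller.
can_pivot_to_initramfs || true
```

If this reports success right before `systemctl reboot` and the pivot still does not happen, the container check or something later in the shutdown path would be the next place to look.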

Luckily, I noticed on another installation that the server initially behaved as expected and rebooted normally. But after an upgrade, the system started to behave as described above.

With these two dots I tried to draw a line … and guessed that one of the upgraded packages could be causing the conflict.

The first upgrade I did on a minimal install upgraded kernel, kernel-tools, and other unrelated packages. I decided to go after kernel-tools.

I found that kernel-tools was upgraded from version 1 to 1.0.1. On pkgs.org, search for kernel-tools-5.14.0-162.6.1.el9_1.x86_64.rpm and kernel-tools-libs-5.14.0-162.6.1.el9_1.x86_64.rpm.

After downgrading those packages, the system could restart as expected.

Conclusion: note that the 1.0.1 package has this problem with RAID managed by mdadm; stay on version 1.

Hope this helps others.

Regards


I had the same problem with a new installation of RL9.1 using soft raid.

Sometimes the shutdown takes more time, sometimes less, but the odd behavior is that if I press Ctrl+Alt+F2 to open a new console, the loop stops and the system shuts down immediately.

About the loop you are seeing, does it look similar to this?

When I run shutdown, I run into the following infinite loop:

watchdog: watchdog0: watchdog did not stop!

block device autoloading is deprecated and will be removed.

block device autoloading is deprecated and will be removed.

block device autoloading is deprecated and will be removed.

block device autoloading is deprecated and will be removed.

block device autoloading is deprecated and will be removed.

block device autoloading is deprecated and will be removed.

blkdev_get_no_open: 277 callbacks …

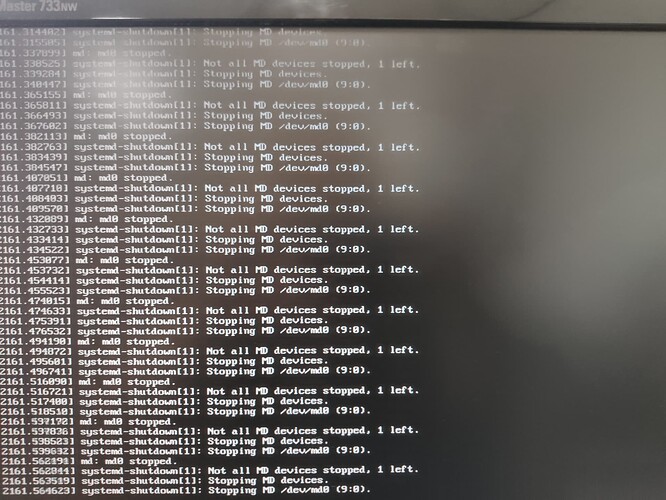

Hi toracat, the loop I see is like this:

Just correcting myself: I have this problem (a loop trying to stop MD devices) with version kernel-tools-5.14.0-162.6.1.el9_1.x86_64.rpm.

Well, this seemed to work as described in the first post until yesterday.

I confidently sent an init 6, but the system kept looping while spitting out these messages …

Not all MD devices stopped. 1 left

stopping md devices

stopping md /dev/mdxxx

So the “solution” is not a solution…

By the way … I tried Ctrl+Alt+Fx and it didn’t work.
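Since the looping messages only say that one MD array is left, it may help to note which arrays are active before rebooting. A minimal sketch, assuming the usual `/proc/mdstat` layout (`mdN : active raidX ...`); the function name is made up for this example.

```sh
#!/bin/sh
# Hypothetical helper: reads /proc/mdstat-style text on stdin and prints
# the names of arrays that are marked active.
active_md_arrays() {
    awk '$2 == ":" && $3 == "active" { print $1 }'
}

# Only query the live system when the kernel exposes md status.
if [ -r /proc/mdstat ]; then
    active_md_arrays < /proc/mdstat
fi
```

Each name printed can then be inspected with `mdadm --detail /dev/<name>` to see its state and member disks.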

Did you find a solution? Did you install 9.0 and update it, or did you install 9.1?

I just installed RL9.0, where the version of kernel, kernel-tools, etc. is 5.14.0-70.13.1.el9_0, and there is no problem with md devices or shutting down the system.

I tried 9.1 again and did an update; the kernel-related packages are now 5.14.0-162.6.1.el9_1.x86_64, but the problem stopping md devices continues.
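For anyone comparing their own machine against the versions mentioned in this thread, here is a rough sketch. `is_suspect_build` is a hypothetical helper, and the version string is simply the one reported above as showing the loop, not a confirmed root cause.

```sh
#!/bin/sh
# Hypothetical helper: succeeds when the given package string names the
# 5.14.0-162.6.1.el9_1 build that posts in this thread associate with the loop.
is_suspect_build() {
    case "$1" in
        *-5.14.0-162.6.1.el9_1*) return 0 ;;
        *) return 1 ;;
    esac
}

# Check the installed packages only when rpm is available.
if command -v rpm >/dev/null 2>&1; then
    for pkg in $(rpm -q kernel kernel-tools kernel-tools-libs 2>/dev/null); do
        if is_suspect_build "$pkg"; then
            echo "build reported in this thread is installed: $pkg"
        fi
    done
fi
```

The 9.0 build mentioned above (5.14.0-70.13.1.el9_0) would not match, which makes it easy to tell the two cases apart when collecting reports.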

toracat

February 22, 2023, 7:29pm
